Progress Report - November 7, 2023 (Patreon)


Hello, everyone!

Sorry for this post being a day late. I wanted to get this system fully functional before I posted about it.

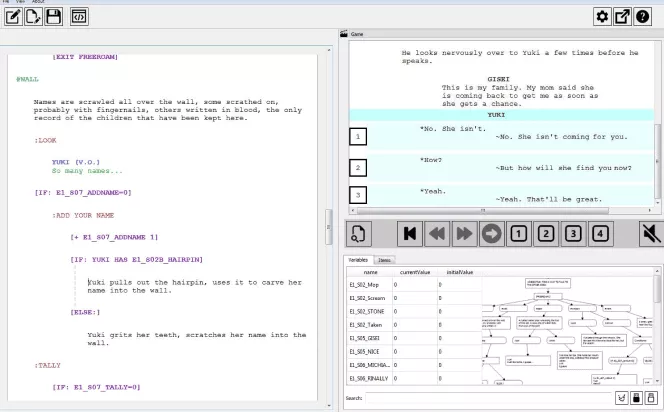

Which naturally raises the question of "what is this system?" And, maybe more pertinently... "what the fuck am I looking at?"

Last week, I put out a list of the things I need to do next for my Unreal Engine game prototype. I very pointedly put the conversation system last, because I knew it was going to be an extremely complex problem.

Naturally, the very next day I immediately began working on the conversation system first, because I knew it was going to be an extremely complex problem. I can't help it, I'm a software engineer at heart. Tackling complex problems is like catnip for me.

The Conversation System

On the Unreal side of things, I actually figured out a pretty slick way to do a conversation system. It's extremely flexible, allowing for pretty much any type of conversation flow, and for pretty much anything to happen within a conversation (minus the player having direct control of their character, anyways).

The only problem is, because I am explicitly allowing for conversations to be complex with a lot of splitting and rejoining at different points within the flow, it's extremely tedious to put together. And it's even more confusing to write and keep track of. It took me over an hour to assemble the 4 Level Sequences involved in the sample conversation I shared over on Twitter here. And that hour came after the writing and audio generation.

The audio generation was another pain point. I'll spare you all the details, and just say the following: "Ability to set voice pitch, ability to set speech speed, ability to save out an audio file. Choose two." I ended up having to manually write out and play back each line of dialogue, record them with Audacity, then trim and export them. One by one.

That workflow is obviously not scalable. It shouldn't take me over an hour to assemble 5 sentences of dialogue. What I need is a toolset to handle all of this rigmarole: I design the flow of the conversation and the words spoken, and the tool generates the audio, creates the Level Sequences, and hooks everything up in Unreal.

And that is exactly what you're looking at.

An Existing Toolchain?

Well, more specifically, you're looking at the first tool in the chain: the graph editor.

When I started out making this toolchain, I looked around for what all existing dialogue tree tools are out there. And there are a lot - some free, many paid, many more proprietary. And that's great! Dialogue is ubiquitous in today's gaming landscape. Even Call of Duty games these days often have rudimentary dialogue systems. So it only makes sense that there's a lot of very smart people working on solving this problem.

The only problem is, at least so far as I can tell... pretty much every single one of these tools follows the screenplay model for dialogue. Even Deck Nine's proprietary Playwrite software, which they proudly boast about (including how it is what convinced Square Enix to task them with making Before the Storm and True Colors), is predominantly a screenplay-model editor. Just look at this unfortunately-very-small screenshot of it from their website.

Yeah, it has a graph view... as one half of one of three panels that takes up a quarter of the screen. It is very clear that the intended use of Playwrite is to write in the screenplay mode, and just use the graph as a visual reference.

For the complexity of the dialogue trees I am imagining, though, that just isn't tenable. Having to scroll up and down through a screenplay, visually tracing out "jump to" points, as the dialogue splits, converges, and splits again, is mentally taxing and a great way to develop early-onset carpal tunnel.

At least in my mind, these dialogues are very visual, represented as trees that split and branch. Huh, maybe that's why they call them "dialogue trees"... But technically speaking, they're not really trees, because you can have multiple branches all join up at the same place, which isn't something that trees do.

That technically makes them a directed graph, not a tree. It seems a minor distinction, but it has massive ramifications for the complexity of the system underneath - and the availability of tools that utilize it.
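
To make the distinction concrete, here is a minimal Python sketch (not the editor's actual code; the node names are made up for illustration). In a tree, every node has at most one incoming edge. The moment two branches rejoin, some node has two or more, and the structure is a directed graph instead.

```python
from collections import defaultdict

def rejoin_points(edges):
    """Return the nodes that have more than one incoming edge, sorted by name."""
    incoming = defaultdict(set)
    for source, target in edges:
        incoming[target].add(source)
    return sorted(node for node, sources in incoming.items() if len(sources) > 1)

# Hypothetical conversation: two choices branch off "greeting" and rejoin at "farewell".
edges = [
    ("greeting", "choice_polite"),
    ("greeting", "choice_rude"),
    ("choice_polite", "reply_polite"),
    ("choice_rude", "reply_rude"),
    ("reply_polite", "farewell"),
    ("reply_rude", "farewell"),
]

print(rejoin_points(edges))  # ['farewell']
```

An empty result would mean no branches ever rejoin. Any non-empty result means tree-only tooling can't represent the conversation without duplicating nodes.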

Ultimately, after much fruitless searching, I came to the conclusion that I am going to have to write this dialogue editor myself. Which, if you've been around for any amount of time, won't surprise you at all.

And so that's what I've been doing, and that's what you're seeing at the top of this post.

The Editor Toolchain

The Graph Editor

The toolchain itself is composed of three elements: the Graph Editor, the Voice Generator, and the Unreal Importer.

As I said earlier, you're looking at the first element, the Graph Editor. I'll spare you the details, and for now just say that there are (currently) two major nodes: dialogue nodes, and choice nodes. Dialogue nodes should only go to a single dialogue node, or to multiple choice nodes. Choice nodes should only go to a single dialogue node.

The editor doesn't actually enforce this right now, though it is on my to-do list. Right now it's juggling with live hand-grenades - if I fuck up, I'm liable to blow my foot off.
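
For illustration, the connection rules above could be checked with something like the following Python sketch. The data shapes (`node_types`, `edges`) and node names are assumptions made for the example, not the editor's real format. It also assumes that a dialogue node with no outgoing edges simply ends the conversation.

```python
def validate_edges(node_types, edges):
    """Return a list of rule violations (empty if the graph follows the rules).

    node_types maps node name -> "dialogue" or "choice".
    edges is a list of (source, target) pairs; every endpoint must be in node_types.
    """
    targets = {name: [] for name in node_types}
    for source, target in edges:
        targets[source].append(target)

    problems = []
    for name, outs in targets.items():
        kinds = [node_types[t] for t in outs]
        if node_types[name] == "choice":
            # Choice nodes should only go to a single dialogue node.
            if kinds != ["dialogue"]:
                problems.append(f"choice node {name!r} must go to exactly one dialogue node")
        elif "dialogue" in kinds and len(kinds) > 1:
            # Dialogue nodes go to ONE dialogue node, or to any number of choice nodes.
            problems.append(f"dialogue node {name!r} mixes or repeats dialogue targets")
    return problems

# Hypothetical example graph.
node_types = {"greeting": "dialogue", "ask_name": "choice",
              "say_nothing": "choice", "reply": "dialogue"}
edges = [("greeting", "ask_name"), ("greeting", "say_nothing"),
         ("ask_name", "reply"), ("say_nothing", "reply")]

print(validate_edges(node_types, edges))  # []
```

Running a check like this on save would turn a silent mistake into an immediate error message.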

Eventually, there will be additional nodes, most notably the condition node. The condition node will have two functions:

If the condition node is placed before a choice node, then it will only show that choice if the condition is met. If the condition node is placed before a dialogue node, then it will only play that dialogue node if the condition is met. Once it's introduced, dialogue nodes will also be able to go to multiple condition nodes, though the previous constraints still apply.

Right now, I'm not sure how I would do arbitrary conditional expressions. And honestly, I'm thinking I won't bother with them. I think I can honestly achieve pretty much any condition I want through simple inequalities (if A >= B then) and chaining conditions together.
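
As a rough sketch of how far simple comparisons plus chaining can go, here is one possible shape in Python. Everything here is an assumption for illustration: the `(left, operator, right)` tuple format, the variable names, and treating a chain as "every condition must hold".

```python
import operator

# The only comparisons a condition may use; nothing more expressive than this.
OPERATORS = {
    ">=": operator.ge,
    "<=": operator.le,
    ">": operator.gt,
    "<": operator.lt,
    "==": operator.eq,
    "!=": operator.ne,
}

def resolve(operand, state):
    """Look up a variable name in the game state; pass literal numbers through."""
    return state[operand] if isinstance(operand, str) else operand

def chain_passes(chain, state):
    """A chain passes only if every (left, op, right) condition in it holds."""
    return all(
        OPERATORS[op](resolve(left, state), resolve(right, state))
        for left, op, right in chain
    )

# Hypothetical game state and condition chain.
state = {"trust": 3, "has_key": 1}
chain = [("trust", ">=", 2), ("has_key", "==", 1)]

print(chain_passes(chain, state))  # True
```

Because each condition is just data, a chain like this can be saved in the graph file and handed to the engine without needing an expression parser.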

The Graph Editor works on a per-conversation basis, wherein a set of characters with voice presets can be defined. The nodes themselves are drop-dead simple. Dialogue nodes have lines, listed in order, denoting what character speaks and what they say. Choice nodes have a directional arrow and the text that they show the player. That's it.

Saving and loading is similarly drop-dead simple: it just flattens the graph out to a list of nodes and edges for saving, and then builds the graph in loading. The Graph Editor doesn't busy itself with the implementation details. That's for the Unreal importer to manage. It just focuses on making it as easy as possible for me to tailor complicated conversations.
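
A minimal Python sketch of that flatten-and-rebuild idea, assuming a JSON file with a `nodes` list and an `edges` list. The field names and the sample conversation are hypothetical. The real editor's file format isn't shown in this post.

```python
import json

def flatten(nodes, edges):
    """Serialize the graph to JSON text holding a node list and an edge list."""
    return json.dumps({
        "nodes": [{"name": name, **data} for name, data in nodes.items()],
        "edges": [{"from": source, "to": target} for source, target in edges],
    }, indent=2)

def rebuild(text):
    """Rebuild the node dictionary and an adjacency map from the flattened form."""
    doc = json.loads(text)
    nodes = {}
    for entry in doc["nodes"]:
        entry = dict(entry)
        nodes[entry.pop("name")] = entry
    adjacency = {name: [] for name in nodes}
    for edge in doc["edges"]:
        adjacency[edge["from"]].append(edge["to"])
    return nodes, adjacency

# Hypothetical two-node conversation.
nodes = {
    "greeting": {"type": "dialogue", "lines": [["Alex", "Hello there."]]},
    "choice_wave": {"type": "choice", "direction": "up", "text": "Wave back"},
}
edges = [("greeting", "choice_wave")]

loaded_nodes, adjacency = rebuild(flatten(nodes, edges))
print(adjacency)  # {'greeting': ['choice_wave'], 'choice_wave': []}
```

Keeping the file as plain nodes and edges means any downstream tool can read it without knowing anything about the editor's UI.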

The Voice Generator

Remember earlier how I said "Pitch, rate, save to file: choose two"?

Well, after entirely too much Googling, and having to plunge deeper into search results than anyone honestly should ever have to, I discovered that apparently I wasn't the only person who was upset with this reality.

Enter Balabolka, a program made by a Russian programmer who was ostensibly disgruntled with the fact that there is a perfectly adjustable text-to-speech system built into Windows, with no way to save the audio to file.

And so, like any disgruntled programmer, he did it himself. Balabolka is the resulting program, and if it wasn't for some obscure Russian modding forum from the early 2000s, I probably never would have found that it even existed.

Balabolka is beyond the perfect program for my needs. Not only does it provide everything I was looking for - the ability to set a TTS voice, adjust the speaking speed, adjust the voice pitch, and output to a WAV file - but it does so much more. It can automatically generate timestamps for each sentence, which means I can automate adding subtitles in Unreal. It can even, no joking, generate lip-sync data. It uses the same exact phoneme/viseme system that Valve uses, which is apparently a Disney standard, meaning that I can, at least theoretically, automatically generate lip-sync animation in Unreal for these generated lines.

And the best part is, Balabolka has a headless mode, meaning I can invoke it programmatically to batch-generate audio files.

And that looks something like this.

The Voice Generator part of the toolchain is a Python script that reads in the Graph Editor data file (the same one that the Graph Editor saves and loads), and then runs the dialogue lines through Balabolka.

The biggest challenge was, no joke, figuring out the naming convention for the audio files. I kept overthinking it, trying to maintain the structure of the entire conversation in the audio files.

If the dialogue were a simple flowing structure, then it could be done something like "01 -> 02A -> 03A; 01 -> 02B -> 03B; 01 -> 02C -> 03C". But, as I've mentioned a few times now, I intend to allow for complex conversation flow. Some conversation paths will go long with multiple choices throughout. Others will end conversations before you even get your second choice. And so a simple number-letter naming mechanism wouldn't work.

Eventually, I came to the realization that it isn't the Voice Generator's job to keep track of the dialogue structure. That's the Graph Editor's job. And so, it just doesn't. Instead, it simply writes out a complementary data file that associates individual lines of dialogue to their generated audio file. That is what that AAF file at the top is. Audio Association File.

The naming convention I settled on is ConversationName_NodeName_LineNumber_CharacterName_LinePreview, where "LinePreview" is just the first few words of the dialogue. Just so I can tell at a glance what it's supposed to be. This does unfortunately mean I have to actually name every node - I can't be lazy and just let them all be Unnamed, and just rely on my perfect memory to remember what node is what when writing the dialogue. Oh darn.
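
Here is a small Python sketch of that naming convention and the association data it feeds. Several details are assumptions for illustration only: the zero-padded line number, the hyphen-joined preview, the `.wav` extension, and the record fields. The actual AAF layout isn't described in this post.

```python
import re

def safe(text):
    """Drop everything except letters and digits so the text is file-name friendly."""
    return re.sub(r"[^A-Za-z0-9]", "", text)

def audio_file_name(conversation, node, line_number, character, line_text, preview_words=4):
    """Build ConversationName_NodeName_LineNumber_CharacterName_LinePreview.wav."""
    words = [w for w in (safe(x) for x in line_text.split()) if w][:preview_words]
    parts = [safe(conversation), safe(node), f"{line_number:02d}",
             safe(character), "-".join(words)]
    return "_".join(parts) + ".wav"

def build_associations(conversation, dialogue_nodes):
    """Produce one record per dialogue line, tying the line to its audio file."""
    records = []
    for node, lines in dialogue_nodes.items():
        for number, (character, text) in enumerate(lines, start=1):
            records.append({
                "node": node,
                "line": number,
                "character": character,
                "text": text,
                "file": audio_file_name(conversation, node, number, character, text),
            })
    return records

# Hypothetical conversation with a single named node.
dialogue_nodes = {"Greeting": [("Alex", "Hello there, how are you today?")]}
records = build_associations("Intro", dialogue_nodes)

print(records[0]["file"])  # Intro_Greeting_01_Alex_Hello-there-how-are.wav
```

Note that nothing in the file name tries to encode where the line sits in the conversation flow. The node name is enough to look that up in the graph data.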

Those LRC files are automatically generated by Balabolka, and they are the timestamps, to be used in generating subtitles. I also have an option to have Balabolka generate VIS files, which are the lipsync visemes. However, that is slow (Balabolka has to play the audio back in realtime to generate them), and I am not going to worry about implementing the lip-sync in Unreal yet anyway. Get the system working first. Then I can add automatic lip-sync.
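
For reference, turning timestamps like these into subtitle cues could look like the Python sketch below. It assumes the common LRC layout of `[mm:ss.xx]text` on each line. Whether Balabolka's output matches that exactly is an assumption, and the sample text is made up.

```python
import re

# Matches "[mm:ss.xx]text"; metadata tags such as "[ti:Title]" do not match.
LRC_LINE = re.compile(r"\[(\d+):(\d+(?:\.\d+)?)\](.*)")

def parse_lrc(text):
    """Return a list of (start_seconds, sentence) pairs from LRC-formatted text."""
    cues = []
    for line in text.splitlines():
        match = LRC_LINE.match(line.strip())
        if match:  # silently skip blank lines and anything that isn't a timestamped line
            minutes, seconds, sentence = match.groups()
            cues.append((int(minutes) * 60 + float(seconds), sentence.strip()))
    return cues

# Hypothetical LRC content for a two-sentence line of dialogue.
sample = "[00:00.00]Hello there.\n[00:01.50]How are you today?"

print(parse_lrc(sample))  # [(0.0, 'Hello there.'), (1.5, 'How are you today?')]
```

Each cue's start time doubles as the previous cue's end time, which is all a basic subtitle track needs.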

The Unreal Importer

This is going to be the big doozy. It's the next on my agenda.

I'll be honest with you all, if there is any point in this pipeline where it all falls apart, it's going to be here. I know that you can write Unreal Editor tools. I just don't know how. I don't even know where to begin. I don't know if you can write them with Blueprints, or if you have to use C++. I don't know if you can use them to create Level Sequences, generate Blueprints for them, and hook everything up.

There's a lot of "I don't know"s involved with this part of the chain, and it's what I am going to be spending the next few days figuring out.

The goal here though is to have the Unreal Importer manage several things. Ideally, I will be able to right-click a base Level Sequence file (which has all of the actors spawned in and positioned in their idle positions), and from the drop-down menu choose an option my tool adds called "Generate Conversation From Base Sequence".

Once that is invoked, it will prompt me to choose both the Graph Editor data file, and the Voice Generator AAF file. It will then parse the Graph Editor data file, reconstructing the conversation internally. For each Dialogue and Choice node, it will then make a copy of the base Level Sequence and rename it after that node (and whether it's a Choice or a Dialogue Sequence). For each generated Level Sequence, it will automatically import all of the audio files in order, using the AAF to find the correct audio files.

It will then add an event on the last frame of each Level Sequence, which will invoke the next Level Sequence. If it's a Dialogue Node that points to another Dialogue Node, then it will just directly play the next Sequence. If it's a Dialogue Node that points to any number of Choice Nodes, then it will call the special Choice subroutine I've programmed in Unreal and hook up all the directions, text prompts, and resulting Level Sequences as appropriate. If it's a Choice Node, it will ignore it because those aren't actually used in the Unreal implementation, they're just an abstraction in the Graph Editor. Similarly, if it's a Condition Node (once those are implemented), it will be ignored and instead will have its condition chain sent to the Choice subroutine.
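
The decision logic for those last-frame events can be sketched in plain Python, independent of Unreal. This is a planning step only, under stated assumptions: it makes no Unreal API calls, the action names (`play_sequence`, `show_choices`, `end_conversation`) are invented for the example, and it assumes the graph already follows the node rules from the Graph Editor section.

```python
def plan_end_events(node_types, adjacency):
    """For each dialogue node, describe what its last-frame event should do."""
    plan = {}
    for name, kind in node_types.items():
        if kind != "dialogue":
            continue  # choice nodes are an editor abstraction and get no event of their own
        targets = adjacency.get(name, [])
        if not targets:
            plan[name] = ("end_conversation",)
        elif node_types[targets[0]] == "dialogue":
            plan[name] = ("play_sequence", targets[0])
        else:
            # Pair each choice with the dialogue sequence it leads to.
            options = [(choice, adjacency[choice][0]) for choice in targets]
            plan[name] = ("show_choices", options)
    return plan

# Hypothetical conversation: one choice point whose branches rejoin at "farewell".
node_types = {
    "greeting": "dialogue", "choice_polite": "choice", "choice_rude": "choice",
    "reply_polite": "dialogue", "reply_rude": "dialogue", "farewell": "dialogue",
}
adjacency = {
    "greeting": ["choice_polite", "choice_rude"],
    "choice_polite": ["reply_polite"], "choice_rude": ["reply_rude"],
    "reply_polite": ["farewell"], "reply_rude": ["farewell"], "farewell": [],
}

print(plan_end_events(node_types, adjacency)["reply_polite"])  # ('play_sequence', 'farewell')
```

Separating "decide what each sequence should do" from "make Unreal do it" keeps the part that is still full of unknowns as small as possible.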

And then, if all is done properly, the entire monstrosity that I had previously spent an hour putting together would all be done automatically in a matter of seconds. All that's left to do is the actual artistry: me going in and animating the cutscene, adding camera angles and what-not. All the technical, systemic rigmarole is done for me.

The Final Form

Of course, this current toolchain, while significantly better than doing all this shit by hand, isn't the be-all-end-all for me.

I've stated before that one of my goals for working with Unreal Engine 5 is to cut out as many middle-man programs as I can. I want to do everything directly in Unreal. Everything.

And so, ideally, I would adapt the Graph Editor to directly use Unreal's Blueprint engine somehow. Since, I mean, I basically just reinvented the Blueprint system anyways. The Voice Generator would be an extension to the Dialogue Blueprint, where I right-click on the Blueprint and simply choose "Generate Dialogue Audio".

It will then run off and invoke Balabolka behind the scenes, but I would never have to leave Unreal to do it. No external Python script I have to manually run. It would add a new asset into my Unreal project, which would be the Unreal equivalent to the AAF association file.

And then finally, I right-click on the base Level Sequence as before, choose "Generate Conversation From Base Sequence", and it prompts me to choose the Dialogue Blueprint and the Unreal AAF file. And it behaves exactly as before.

All three parts of this toolchain, all natively in Unreal.

That is the goal. But that requires an even more extensive knowledge of how to build Unreal Editor tools than what I already need to know. So, right now, an HTML Graph Editor, a Python Voice Generator, and an Unreal Importer will be perfectly sufficient for my needs.

Once I have that pipeline working, I can look to move everything into Unreal.

Get it working first. Get it working well later.

So yeah. I've done a bit more stuff than just this, mostly related to the Unreal implementation of the dialogue system. But I think I've written your eyes off already. I won't bore you with all those details.

Needless to say, I've been busy, and will continue to be busy. All good stuff, though. So far, everything has gone suspiciously well.

That's all for now, though! Until next time, everyone!